Processor hardware, it seems, is having a midlife crisis.

Now that the International Technology Roadmap for Semiconductors (ITRS) no longer uses Moore’s Law as its benchmark for improvement, integrated-circuit hardware will have to find new paths to progress.

Hardware’s issues

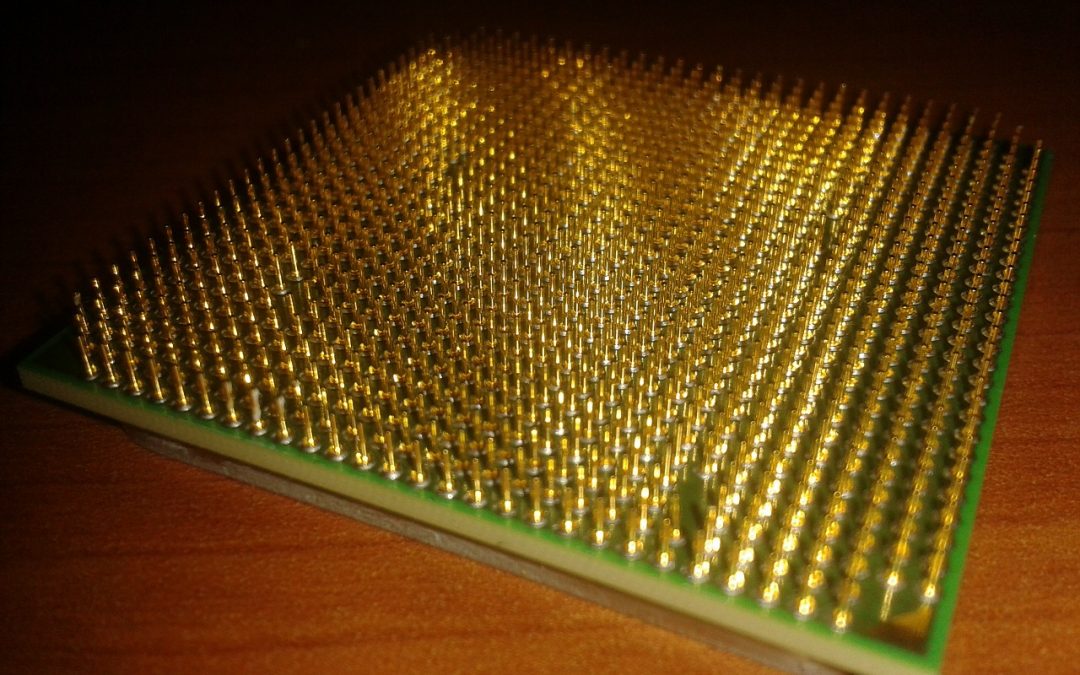

It has become increasingly difficult simply to keep raising clock speeds and packing more transistors onto a chip. Even so, new approaches (continued geometric scaling, multiple cores) have eased the component-density problem and pushed performance further. Chips built on 10 nm processes, and processors with 16 or even 32 cores, are in the pipeline.

A big problem with both approaches, though, is heat. More components per square centimeter, and more components overall, mean more heat to get rid of. Overcoming this requires innovation in heat-dissipation technologies and better application of the ones we already have.

Taking an alternative approach

Consider the following milestone: the multi-core CPU in an iPhone 6s is several orders of magnitude faster than the first server that all of Adept ran on. There is no doubt that processors and memory have become so cheap, so small and so power-efficient that they are now everywhere. Computing is becoming distributed on a scale we haven’t seen before. On top of that, software runs on everything. The Internet of Things has pretty much dictated that this is the direction we will be taking, after all.

So while Moore’s Law may technically be dead, the trend continues, albeit along a different line. We get more and more and more computing for less and less and less money, which, if not the letter of Moore’s Law, was always its spirit.

Thinking ahead on energy

In data centers, we really need to care about the energy that hardware consumes and the heat it generates. There is little doubt that energy is going to be the next major driver of sourcing and supply, and the massive growth of the smartphone supply chain is going to shape that in future.

The ARM chips used in mobile phones are starting to move into the data center. This is happening because they are energy-efficient (as smartphones demonstrate) and because they run the software that powers most of the Web (Linux).

This may drive equipment to become smaller and therefore denser, less power-hungry and ultimately cooler. The net result? More computing per server rack, using less power and needing less cooling. Using that capacity requires the one thing software has traditionally been terrible at: parallelism. Thankfully, the last decade has seen massive advances in that regard (think of massively scalable platforms such as Twitter).

The author interviewed Gideon le Grange, Technical Director, for this article.